How I helped fix sleep-wake hangs on Linux with AMD GPUs

I dual-boot my desktop between Windows and Linux. Over the past few years, Linux would often crash when I tried to sleep my computer with high RAM usage. Upon waking it would show a black screen with a moving cursor, or enter a "vegetative" state with no image on-screen, only responding to magic SysRq or a hard reset. I traced this behavior to an amdgpu driver power/memory management bug, which took over a year to brainstorm and implement solutions for.

TL;DR: The bug was fixed in agd5f/linux, and the change was expected to ship in stable kernel 6.14. Update: the fix has since been reverted due to possible deadlocks.

Diagnosing the problem

I started debugging this issue in 2023-09. My setup was a Gigabyte B550M DS3H motherboard with AMD RX 570 GPU and 1TB Kingston A2000 NVMe SSD, running Arch Linux with systemd-boot and Linux 6.4.

The first thing I do after a system crash is to check the journals. For example, journalctl --system -b -1 will print system logs from the previous boot (dmesg and system services, excluding logs from my user account's apps).

The output showed that some sleep attempts had out-of-memory (OOM) errors in kernel code under amdgpu_device_suspend, and it took one or more failed attempts before the system crashed. Though oftentimes journalctl would print no logs whatsoever of the broken system waking up, terminating at:

Aug 30 12:47:01 ryzen systemd-sleep[41722]: Entering sleep state 'suspend'...

Aug 30 12:47:01 ryzen kernel: PM: suspend entry (deep)

At one point after my computer attempted to sleep, it entered an "undead" state where the computer woke up enough to show KDE's lock screen clock updating in real time, but locked up if I tried to log in or interact beyond a REISUB reboot. Terrifyingly, after rebooting the PC and checking the journals, they stopped at Entering sleep state 'suspend'... and contained no record of waking up and loading KDE's lock screen. I concluded that the NVMe storage driver failed to reinitialize during system wakeup, causing the system to freeze and logs to stop being written.
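A journal that stops at a suspend entry can be spotted mechanically. The following is a minimal sketch, not a tool used during this investigation: it assumes the journal has been exported as text lines, and that a completed suspend cycle logs the kernel's "PM: suspend exit" message after the "PM: suspend entry" line shown above. The function name unfinished_suspends is made up for illustration.

```python
import re

# Kernel messages logged when a suspend attempt starts and when it finishes.
SUSPEND_ENTRY = re.compile(r"kernel: PM: suspend entry \(([^)]+)\)")
SUSPEND_EXIT = re.compile(r"kernel: PM: suspend exit")


def unfinished_suspends(lines):
    """Return each 'suspend entry' line that has no later 'suspend exit'."""
    unfinished = []
    pending = None  # most recent entry line still waiting for its exit
    for line in lines:
        if SUSPEND_ENTRY.search(line):
            if pending is not None:
                # A new attempt started before the previous one logged an exit.
                unfinished.append(pending)
            pending = line
        elif SUSPEND_EXIT.search(line):
            pending = None
    if pending is not None:
        unfinished.append(pending)
    return unfinished
```

Feeding it the output of journalctl --system -b -1 split into lines would flag a boot whose log ends mid-suspend. A missing exit line does not prove the kernel never resumed; as described above, it can also mean the journal could no longer be written to disk.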

I suspected that Linux was telling my NVMe drive to enter a power-saving APST mode, but my drive would instead stop responding to requests permanently. The Arch wiki suggested that I disable APST by adding kernel parameter nvme_core.default_ps_max_latency_us=0 and enable software IOMMU using iommu=soft, but this did not solve my problem. Later I installed an SSD firmware upgrade that fixed APST handling (bricking not covered by warranty 😉), and upgraded to a 2TB boot SSD, but neither step helped.

- One contributing factor was that systemd would try multiple sleep modes back to back (bug report). If the first sleep attempt failed due to OOM, subsequent attempts would generate noise in system logs and corrupt the kernel further. I turned this off on my system by editing /etc/systemd/sleep.conf and adding SuspendState=mem; this simplified debugging but did not solve the underlying problem.

- I also tried echo 1 > /sys/power/pm_trace to check where suspend failed, which had the side effect of disabling asynchronous suspend (which suspended multiple devices in parallel). I noticed that Linux would recover from amdgpu suspend failures rather than entering a full system hang. pm_trace stores sleep-wake progress into a computer's system time (docs), so after a failed sleep you can find which operation hung. At one point I rebooted or woke up my computer, only to find that the time was decades off!

Next I enabled the systemd debug shell, which allowed me to run commands on a broken system even when I was unable to unlock KDE or log into a TTY. I added a systemd.debug_shell kernel parameter to my systemd-boot config, though the Fedora wiki says you can also run systemctl enable debug-shell to enable it through systemd itself. Afterwards pressing Ctrl-Alt-F9 would spawn a root shell.

Because the kernel crash sometimes broke the motherboard's USB controller and keyboards, preventing me from typing into the debug shell, I dug a PS/2 keyboard out of a dusty closet and plugged it into my system (only safe when the PC is off!). This helped me navigate the debug shell, but was largely obsoleted by the serial console I set up later, which doesn't require a working display output.

After looking through my crash logs, I noticed that the crashes generally happened in amdgpu's TTM buffer eviction (amdgpu_device_evict_resources() → amdgpu_ttm_evict_resources()). To learn what this meant and report my findings, I looked on amdgpu's GitLab bug tracker for issues related to sleep-wake crashing. I found a bug report about crashing under high memory usage, which reported that "evict" means copying VRAM to system RAM (or system RAM to swap).

Through some digging, I found that when a desktop enters S3 sleep, the system cuts power to PCIe GPUs, causing their VRAM chips to lose data. To preserve this data, GPU drivers copy VRAM in use to system RAM before the system sleeps, then restore it after the system wakes. However the Linux amdgpu driver has a bug where, if there is not enough free RAM to store all VRAM in use, the system will run out of memory and crash, instead of moving RAM to disk-based swap.

- If Linux OOMs during suspend, it will cancel sleeping and attempt to restart devices. However some drivers may break due to the aborted sleep, or themselves OOM during suspend or resume. This is more likely with asynchronous suspend enabled.

- Alternatively Linux may successfully suspend, but OOM during resume while starting up devices.

Upstream debugging

I thought that in order to allow swapping to disk, you'd have to evict VRAM to system RAM (while swapping out system RAM if it fills up) before suspending disk-based storage. After we discussed on the bug report, Mario Limonciello suggested that I enable /sys/power/pm_print_times and /sys/power/pm_debug_messages (docs) and check the logs from sleeping. The resulting logs showed that during sleep, the NVMe and amdgpu drivers entered pci_pm_suspend in parallel.

I started looking for ways to move GPU suspend before SSD suspend, and found a mechanism for ordering device suspend. However it appeared built more for synchronizing tightly-coupled peripherals than suspending every GPU before any system disk. Mario instead suggested evicting VRAM during Linux suspend's prepare phase (before disks are suspended), and wrote some kernel patches to move VRAM eviction there.

As an overview of how the suspend process fits together, I've written a flowchart of Linux's suspend control flow (source code, drivers):

    enter_state(state) { // kernel/power/suspend.c
        suspend_prepare(state); // notify drivers
        ...
        pm_restrict_gfp_mask(); // disable swap
        suspend_devices_and_enter(state) → dpm_suspend_start() { // drivers/base/power/main.c
            dpm_prepare() {
                // Call device_prepare() → callback on each device in series.
                ...amdgpu_pmops_prepare();
            }
            dpm_suspend() {
                // Call async_suspend() → device_suspend() in parallel,
                // and/or device_suspend() → callback in series.
                ...amdgpu_pmops_suspend();
            }
        }
    }

The patch moved VRAM eviction (and some other large allocations) from dpm_suspend() (when the SSD is being turned off) to dpm_prepare() earlier. This way, if amdgpu runs out of memory while backing up VRAM, it will abort the suspend before entering dpm_suspend() and attempting to suspend other devices. Previously, backing up VRAM during/after suspending other devices could cause them to crash from OOM themselves, or fail to resume from a failed suspend.

Unfortunately suspending on high RAM usage would still fail to complete, even though the disks were still powered on! The amdgpu developers initially did not think that swap was disabled, but I discovered that the call to pm_restrict_gfp_mask() disabled swap before either dpm_prepare() or dpm_suspend() was called. When backing up VRAM during dpm_prepare(), amdgpu_ttm_evict_resources() often ran out of contiguous memory, causing dpm_prepare() to fail and abandoning the sleep attempt.

Worse yet, if amdgpu_ttm_evict_resources() managed to fit all VRAM into system RAM, but there was not enough free memory to handle later allocations, then drivers would hit OOM and crash during sleep or wake. This meant that if you used up just enough RAM, you could still crash your system even with this patch. Nonetheless, the amdgpu developers considered Mario's kernel patch to be an improvement over backing up VRAM during dpm_suspend(), and submitted the patch to kernel review.
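The ordering problem can be illustrated with a toy model. This is only a sketch under loud assumptions: it collapses the whole suspend path into three named steps, treats the parallel device suspend as a single step, treats memory as one number, and ignores the later allocations mentioned above. The step names, the function try_suspend, and the third ordering (evicting before swap is disabled) are hypothetical and exist only for this illustration.

```python
def try_suspend(steps, free_ram_gib, vram_in_use_gib):
    """Walk a simplified suspend sequence and report how it ends."""
    swap_allowed = True  # cleared by pm_restrict_gfp_mask()
    disks_on = True      # cleared once device suspend powers down the SSD
    for step in steps:
        if step == "pm_restrict_gfp_mask":
            swap_allowed = False
        elif step == "suspend_disks":
            disks_on = False
        elif step == "evict_vram":
            # VRAM only fits if RAM has room, or if RAM can be swapped out.
            can_swap = swap_allowed and disks_on
            if vram_in_use_gib > free_ram_gib and not can_swap:
                return "OOM while evicting VRAM"
        else:
            raise ValueError(f"unknown step: {step}")
    return "suspended"


# Eviction inside dpm_suspend(), modelled as happening after the disk goes down.
EVICT_IN_SUSPEND = ["pm_restrict_gfp_mask", "suspend_disks", "evict_vram"]
# Eviction moved to dpm_prepare(): disks are still on, but swap is already disabled.
EVICT_IN_PREPARE = ["pm_restrict_gfp_mask", "evict_vram", "suspend_disks"]
# Hypothetical ordering where eviction runs before swap is disabled.
EVICT_BEFORE_RESTRICT = ["evict_vram", "pm_restrict_gfp_mask", "suspend_disks"]
```

With 2 GiB free and 4 GiB of VRAM in use, the first two orderings return "OOM while evicting VRAM" and only the third returns "suspended". In this model, moving eviction into dpm_prepare() changes where the failure happens but not whether it happens.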

- When testing the patch, "daqiu li" reported extremely slow suspend (100 seconds) and suggested the use of __GFP_NORETRY for allocation. I did not experience the issue and did not know how to evaluate the suggestion, and the amdgpu developers did not respond either.

Along the way, Mario suggested I could hook up a serial console to my computer to pull logs off the system, even when the display and SSD were down. I found an internal serial header on my motherboard, bought a motherboard-to-DB9-bracket adapter (warning: there are two layouts of motherboard connector, and the header pin numbering differs from the port pins), and hooked my motherboard up to a serial-to-USB cable I plugged into my always-on server laptop. This allowed my laptop to continually capture data sent over the serial port, acting as a persistent serial console I could check when my PC crashed.

On my desktop, I set up systemd-boot to pass additional parameters no_console_suspend console=tty0 console=ttyS0,115200 loglevel=8 (docs) to the kernel.[1] On my laptop, I opened a terminal and ran sudo minicom --device /dev/ttyUSB0 --baudrate 115200 to monitor the computer over serial. In addition to saving logs, I could use the serial console to run commands on the system. Text transfer was slower than a direct getty TTY or SSH (a long wall of text could block the terminal for seconds), and it ran into issues with colors and screen size (breaking htop and fish shell completion), but it could often survive a system crash that broke display and networking.

Intermission: Debugging crashes with Ghidra

While testing swapping during prepare(), I got my usual OOM errors during or after backing up VRAM, but one crash stood out to me. At one point, amdgpu crashed with error BUG: unable to handle page fault for address: fffffffffffffffc, attempting to dereference a near-null pointer. To me this looked like a state-handling bug caused by failing to test a pointer for null.

The crash log mentioned that the null pointer dereference occurred at dm_resume+0x200, but did not provide a line number corresponding to the source code I had. So I did the natural thing: I saved and extracted the amdgpu.ko kernel module, decompiled it in Ghidra, and mapped the location of the crash in dm_resume to the corresponding lines in the kernel source.

While looking at the code, I found that the macro for_each_new_crtc_in_state(dm->cached_state, crtc, new_crtc_state, i) was crashing when it loaded pointer dm->cached_state into register RSI, then loaded field ->dev = [RSI + 0x8]. The crash dump said that RSI was fffffffffffffff4 rather than a valid pointer, then the code tried loading a field at offset 8 and page-faulted at address fffffffffffffffc (= RSI + 8).

Why was dm->cached_state storing -12 instead of a pointer? Most likely this happened because earlier during suspend, dm_suspend() assigned dm.cached_state = drm_atomic_helper_suspend(adev_to_drm(adev)). The callee drm_atomic_helper_suspend() could return either a valid pointer, or ERR_PTR(err) which encoded errors as negative pointers. But the caller function assigned the return value directly to a pointer which gets dereferenced upon resume, instead of testing the return value for an error.

In this case, I think that drm_atomic_helper_suspend() ran out of memory, printed and/or returned -ENOMEM (-12), and the amdgpu suspend code interpreted it as a pointer and unsafely dereferenced it upon waking. Mario fixed this issue by adding code to check for a failed return value and abort the suspend instead.
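The address arithmetic can be checked with a short model. This is a sketch of how error pointers behave on a 64-bit machine, written in Python rather than taken from kernel source. The helper names err_ptr, is_err and field_address are made up to mirror ERR_PTR() and IS_ERR(), and the 4095 bound is the kernel's MAX_ERRNO.

```python
MASK64 = (1 << 64) - 1  # pointers are 64 bits wide on x86-64
MAX_ERRNO = 4095        # the top 4095 addresses are reserved for error codes
ENOMEM = 12


def err_ptr(err):
    """Model ERR_PTR(): reinterpret a negative errno as a pointer value."""
    return err & MASK64


def is_err(ptr):
    """Model IS_ERR(): true if the pointer value falls in the errno range."""
    return ptr >= (-MAX_ERRNO & MASK64)


def field_address(ptr, offset):
    """Address the CPU touches when loading a field at the given offset."""
    return (ptr + offset) & MASK64
```

Here err_ptr(-ENOMEM) evaluates to 0xfffffffffffffff4, the value seen in RSI, and field_address(err_ptr(-ENOMEM), 8) evaluates to 0xfffffffffffffffc, the faulting address from the crash log. A check equivalent to is_err() on the stored value is what separates a real state pointer from an encoded error.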

Abandoned: Allow swapping during prepare()?

At this time, high RAM usage still caused sleeping to fail. This happens because amdgpu backs up VRAM during dpm_prepare(), which runs after pm_restrict_gfp_mask() disables swapping to disk. I wanted to fix sleeping under high RAM usage by disabling swap after dpm_prepare() backs up VRAM, but before dpm_suspend() turns off the disk. Both dpm_…() functions were called within dpm_suspend_start(), so this required moving pm_restrict_gfp_mask() deeper into the call hierarchy.

    enter_state() // kernel/power/suspend.c
        // removed pm_restrict_gfp_mask() call
        → suspend_devices_and_enter() → dpm_suspend_start(PMSG_SUSPEND) { // drivers/base/power/main.c
            dpm_prepare() {
                // backup VRAM, swap RAM to SSD
            }
            pm_restrict_gfp_mask(); // disable swap
            dpm_suspend() {
                // turn off SSD
            }
        }

Unfortunately this posed some practical challenges:

- pm_restrict_gfp_mask() is declared in kernel/power/power.h (in the kernel/ core folder), and called in kernel/power/suspend.c.

- I wanted to call it in dpm_suspend_start() in file drivers/base/power/main.c, in the drivers/ subsystem. This file does not include headers from kernel/power/, but only includes <linux/...h> from include/, and "../base.h" etc. from drivers/base/power/.

As a hack to allow drivers/base/power/main.c to access likely-private kernel core APIs, I edited it and added #include <../kernel/power/power.h> (from include/). If I wanted to upstream my change, I'd have to convince the power management and driver maintainer (both files were maintained by Rafael J. Wysocki) that this memory management API should be accessed by the driver subsystem.

On top of this, there were more correctness challenges to disabling swap during dpm_suspend_start(). For example, hybrid sleep calls pm_restrict_gfp_mask() while saving a system image, then leaves swap disabled and calls suspend_devices_and_enter() → dpm_suspend_start() while expecting the function will not call pm_restrict_gfp_mask() again (which would throw a warning and prevent pm_restore_gfp_mask() from reenabling swap). Hybrid sleep initiated through the less-used userspace ioctl API also leaves swap disabled after saving a system image.

To "handle" this case, I added a function bool pm_gfp_mask_restricted(void), and modified dpm_suspend_start() to not call pm_restrict_gfp_mask() if it was already active.

- Oddly hybrid sleep calls dpm_prepare() … dpm_suspend() twice. It calls these functions to power down devices, saves a system image to disk, wakes the devices back up, then enters regular sleep mode through suspend_devices_and_enter(). (It only calls pm_restrict_gfp_mask() and pm_restore_gfp_mask() once.)

- I did not look into how drivers handle hibernation (dpm_prepare(PMSG_FREEZE)) and sleep (dpm_suspend_start(PMSG_SUSPEND) → dpm_prepare(PMSG_SUSPEND)) differently.
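The double-restrict hazard and the guard described above can be modelled in a few lines. This is a toy model under assumptions, not kernel code: it assumes the restrict call saves the current mask before clearing the I/O bits and that the restore call puts the saved mask back. The bit values and the class GfpMaskModel are hypothetical, and is_restricted() stands in for the pm_gfp_mask_restricted() helper mentioned above.

```python
# Hypothetical bit values; only their roles matter in this model.
GFP_IO = 0x1     # allocations may start disk I/O (needed for swapping)
GFP_FS = 0x2     # allocations may call into filesystems
GFP_OTHER = 0x4  # stand-in for every other allowed flag


class GfpMaskModel:
    def __init__(self):
        self.allowed = GFP_IO | GFP_FS | GFP_OTHER
        self.saved = 0     # 0 means "nothing saved, not restricted"
        self.warnings = 0  # counts would-be kernel warnings

    def restrict(self):
        if self.saved:
            self.warnings += 1  # restricting twice is a bug
        self.saved = self.allowed
        self.allowed &= ~(GFP_IO | GFP_FS)

    def restore(self):
        if self.saved:
            self.allowed = self.saved
            self.saved = 0

    def is_restricted(self):
        return self.saved != 0

    def can_swap(self):
        return bool(self.allowed & GFP_IO)
```

Calling restrict() twice and then restore() once leaves can_swap() false, because the second call overwrites the saved mask with the already-restricted one. Skipping the second call when is_restricted() is true avoids both the warning and the lost mask.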

In my testing, this reduced the rate of failed or crashed suspends, but did not fix crashing entirely. After a system lockup I checked my serial console, but found to my dismay that I hadn't restarted my serial logger after I rebooted my laptop, leaving me with no clue about what caused the crash. I decided against trying to upstream an "improvement" to power management that required fragile changes to core power management infrastructure (outside of the amdgpu driver) and didn't even fully solve the problem.

Sidenote: Corrupted consoles on shutdown

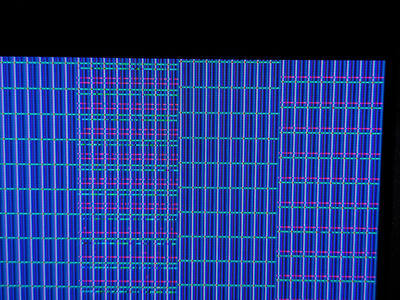

While shutting down my machine, I've been intermittently getting corrupted screens filled with 8x16 blocks of colors. This pattern would fill the space around the shutdown console (which is the resolution of the smallest connected screen), though the console itself was missing since I had redirected it to serial.[1] When this happened, I usually found a kernel error from dc_set_power_state during a previous (successful) sleep attempt, pointing to line ASSERT(dc->current_state->stream_count == 0); in the amdgpu driver.

I've reported the bug a few times in the thread, but have not made a separate bug report for this issue (because the symptoms are minor enough to be ignored). Neither I nor the amdgpu maintainers have determined why this happens.

Workaround: Evicting VRAM in userspace

A year later in 2024-10, I finished a session of SuperTuxKart, closed the game, and put my computer to sleep. When I woke my computer I was greeted by a black screen. I logged into the serial console and ran sudo rmmod -f amdgpu trying to reset the driver, but triggered a kernel panic instead. (I've sometimes managed to recover from an amdgpu crash by instead using systemctl suspend to power-cycle the GPU and driver.)

Reviewing the logs revealed that one failed sleep attempt OOM'd in amdgpu during dpm_prepare(), and the next attempt used up enough memory that during resume, amdgpu's bw_calcs() crashed when allocating memory. This sent the amdgpu driver into an inconsistent state, resulting in a black screen and a stream of errors in the journal.

At this point I had the idea to copy NVIDIA's userspace VRAM backup system. NVIDIA faced the same issue of being unable to save large amounts of VRAM to RAM when swap was disabled[2], and wrote scripts that systemd runs before/after it tells the Linux kernel to sleep. Prior to sleeping, nvidia-suspend.service writes to /proc/driver/nvidia/suspend, telling the driver to switch to a blank TTY and back up VRAM to a tmpfile. After resuming, nvidia-resume.service tells the driver to restore VRAM from the file and return to the previous session.

I forked NVIDIA's system services and built an amdgpu-sleep package for Arch Linux. Prior to system sleep, my script reads the contents of file /sys/kernel/debug/dri/1/amdgpu_evict_vram, a debugging endpoint that tells amdgpu to save all VRAM into system RAM. This way every time the system tried to sleep, systemd would wait for the GPU's VRAM to be evicted (moving system RAM into swap if needed) before initiating a kernel suspend.
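The core of such a pre-sleep hook is a single file read. The sketch below is not the amdgpu-sleep package's actual script: it is a Python illustration that assumes debugfs is mounted, that it runs as root, and that the GPU is DRI device 1 as in the path above (the index can differ between machines). The function name evict_vram is made up, and what the file returns is treated as opaque text.

```python
import time
from pathlib import Path

# Path taken from the text above; the DRI index is machine-specific.
EVICT_VRAM = Path("/sys/kernel/debug/dri/1/amdgpu_evict_vram")


def evict_vram(path=EVICT_VRAM):
    """Read the debugfs file, which asks amdgpu to evict VRAM to system RAM.

    The read blocks until the driver finishes or gives up, so the elapsed
    time is returned alongside whatever text the driver reported.
    """
    start = time.monotonic()
    result = Path(path).read_text()
    elapsed = time.monotonic() - start
    return result.strip(), elapsed
```

Timing the read makes slow evictions visible in the logs of whichever service runs the hook before sleep.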

This workaround was partly successful. When I slept my computer from the desktop, the script could quickly copy all VRAM to memory (swapping out RAM to make room for VRAM) before entering the kernel to sleep the system. But when I slept my computer with multiple 3D apps running, the apps would continue rendering frames and pulling VRAM back onto the GPU, while the amdgpu_evict_vram callback was trying to move VRAM to system RAM! This tug-of-war livelock continued for over 70 seconds before amdgpu_evict_vram gave up on trying to move all VRAM to system memory, and systemd began the kernel suspend process (which froze userspace processes and successfully evicted VRAM).

In any case, I left the scripts enabled on my computer, since they caused livelock less often than disabling the scripts caused kernel-level crashes.

Solution: Power management notifiers

In 2024-11, Mario asked users to test a patch that promised to allow evicting while swap was still active. I looked into the patch sources and found that it called a function register_pm_notifier() on a callback struct; this function belongs to Linux's power management notifier API. The callback in the patch listens to PM_HIBERNATION_PREPARE and PM_SUSPEND_PREPARE messages, and calls amdgpu_device_evict_resources() to evict VRAM.

When is PM_SUSPEND_PREPARE issued during the suspend process? Reading the code, enter_state() → suspend_prepare() calls pm_notifier_call_chain_robust(PM_SUSPEND_PREPARE, PM_POST_SUSPEND). This issues PM_SUSPEND_PREPARE to every driver with a notifier callback (including amdgpu), and if any failed it would abort sleep by issuing PM_POST_SUSPEND to any driver that had already prepared for sleep.

We can revise the flowchart from before:

    enter_state(state) { // kernel/power/suspend.c
        suspend_prepare(state) {
            pm_notifier_call_chain_robust(PM_SUSPEND_PREPARE, PM_POST_SUSPEND) { // notify drivers
                ...amdgpu_device_pm_notifier() → amdgpu_device_evict_resources();
            }
        }
        ...
        pm_restrict_gfp_mask(); // disable swap
        suspend_devices_and_enter(state) → dpm_suspend_start() { // drivers/base/power/main.c
            dpm_prepare()...
            dpm_suspend()...
        }
    }

Evicting VRAM during PM_SUSPEND_PREPARE allows amdgpu to evict VRAM to system RAM before swapping is disabled or disks are frozen. It's interesting that neither Mario nor the other amdgpu maintainers thought to use this alternative hook until a year after I initially investigated the issue; I was not aware that this notifier API existed until then.
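The rollback behaviour of the robust notifier chain can be sketched in userspace. This is a simplified model of the semantics described above, not the kernel implementation: notifiers are plain Python callables that return True on success, and the helper names call_chain_robust and make_notifier are invented for the example.

```python
def call_chain_robust(notifiers, val_up, val_down):
    """Send val_up to each notifier in order.

    If one fails, send val_down to the notifiers that had already
    succeeded, then report failure so the caller can abort the suspend.
    """
    for index, notifier in enumerate(notifiers):
        if not notifier(val_up):
            for earlier in notifiers[:index]:
                earlier(val_down)
            return False
    return True


def make_notifier(name, log, fail_on=None):
    """Build a notifier that records each event and fails on one of them."""
    def notifier(event):
        log.append((name, event))
        return event != fail_on
    return notifier
```

If a notifier standing in for amdgpu fails on PM_SUSPEND_PREPARE, for example because eviction ran out of memory, the notifiers before it receive PM_POST_SUSPEND and the ones after it are never called. The suspend is abandoned before swap is disabled or any device is powered down.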

To test the change, I built a custom kernel with the modified amdgpu driver, as rebuilding only the driver failed unlike last year. After rebooting, I was able to suspend multiple times under high RAM and VRAM usage with no errors. The only issue I noticed was a few seconds of audio looping, as amdgpu tried to back up VRAM before PipeWire or the kernel silenced the speakers (and PipeWire does not configure the ALSA output to stop playing when it runs out of data to be played).

I do not know that this patch will always fix suspend, since my previous "allow swapping during prepare()" patch still hung the system during a sleep attempt. But since this patch was much cleaner and worked in all cases I had tested so far, I thought it was the current best step to fixing the bug. After a few rounds of code review, this change was merged into the amdgpu tree, finally resolving the bug after over a year of attempts.

Sidenote: Alternative userspace sleep-wake workarounds, memreserver

Reading the patch message out of curiosity, I found a separate bug report filed against AMD's ROCm compute drivers in 2024-10 (my bug report was against 3D graphics). This report described the same problem (OOM evicting VRAM on suspend), but the replies linked to yet another amdgpu workaround known as memreserver, developed from 2020 to 2023. Like my userspace eviction attempt, this program is also a systemd service which runs a userspace program prior to system sleep.

To make room for VRAM, memreserver allocates system RAM based on used VRAM plus 1 gigabyte, then fills the RAM with 0xFF bytes and mlocks the memory (so none of it is swapped out). Afterwards it quits to free up enough physical RAM to fit allocated VRAM. I have not tested this program's functionality or performance, but suspect that filling gigabytes of RAM with dummy bytes may be unnecessary or slow (though it's obviously better than a system crash).

Conclusion

This took over a year of debugging and multiple attempts by many people to fix. It should be hitting stable Linux kernel 6.14 in 2025 (unless it gets pushed or backported to 6.13), and will be fanning out to distributions as they pick up new kernels in their update cycles.

Update (2025-06)

Unfortunately the fix had to be reverted due to a bug it introduced. If you suspend the system without systemd freezing all userspace processes first, then the kernel tries to evict VRAM to system RAM before the kernel freezes processes itself. If a 3D program is pulling VRAM back to the GPU while this is happening, this can deadlock the suspend eviction process, much like when I tried evicting VRAM in userspace.

The Linux PM maintainer did suggest moving the pm_restrict_gfp_mask() call "into" suspend_devices_and_enter(), which matches my earlier experiment of allowing swapping during prepare(). However I'm not planning to implement and submit this myself, as I've moved my AMD GPU to an older computer with a slower CPU and 8 GB of memory, and I don't want to run kernel builds on it.

In a delicious irony, I've upgraded my main computer to an Intel Arc B570... which has the same issue, but now with 10 GB of VRAM! I've reported it to the bug tracker, but don't know if it can be fixed. Perhaps I might take another stab at moving pm_restrict_gfp_mask() to later...

Footnotes

[1] While initially debugging this issue, I redirected the system log to serial console only, using command line no_console_suspend console=ttyS0,115200 loglevel=8. I believe I did this to prevent system logs from popping up while I was typing commands on systemd's debug shell. An unintended side effect is that during startup and shutdown, no logs were visible on screen. My current command line avoids this problem.

[2] In fact, I had to uninstall the nvidia proprietary drivers from an Ubuntu machine because it was hanging on sleep, reverting to nouveau and its single-digit FPS video playback. The user didn't notice or care. I did.